S3 for logs

Overview

AWS S3 is an object storage service that lets you store and manage data with scalability, high availability, low latency, and high durability. A single S3 object can be up to five terabytes in size. Several AWS services can store their logs in an S3 bucket. This integration collects line-oriented logs stored in such a bucket.
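To illustrate what "line-oriented" means here, the following is a minimal sketch that treats every non-empty line of an object's content as one log record. The function name `split_log_lines` and the UTF-8 default are assumptions made for this example; they do not describe how the integration is implemented.

```python
def split_log_lines(payload: bytes, encoding: str = "utf-8") -> list[str]:
    """Split an object's raw content into one record per non-empty line."""
    # Undecodable bytes are replaced rather than raising, so one bad byte
    # does not discard the whole object (an assumption for this sketch).
    text = payload.decode(encoding, errors="replace")
    # splitlines() handles both "\n" and "\r\n" line endings.
    return [line for line in text.splitlines() if line.strip()]


# Hypothetical object content with mixed line endings and a blank line.
sample = b"first event\nsecond event\r\n\nthird event"
records = split_log_lines(sample)
```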

Configure

Deploying the Data Collection Architecture

This section guides you through creating all the AWS resources needed to collect AWS logs. If you want to use existing resources, you may do so, but you are responsible for any issues or incompatibilities with this tutorial.

Prerequisites

To set up the AWS architecture, you need administrator access to the Amazon console, with permissions to create and manage S3 buckets, SQS queues, S3 notifications, and users.

To get started, click on the button below and fill in the form on AWS to set up the required environment for Sekoia.

You need to fill in four inputs:

- Stack name - Name of the stack in CloudFormation (Name of the template)

- BucketName - Name of the S3 Bucket

- IAMUserName - Name of the dedicated user that accesses the S3 bucket and the SQS queue

- SQSName - Name of the SQS queue

Read the different pages and click on Next, then click on Submit.

You can follow the creation in the Events tab (it can take a few minutes).

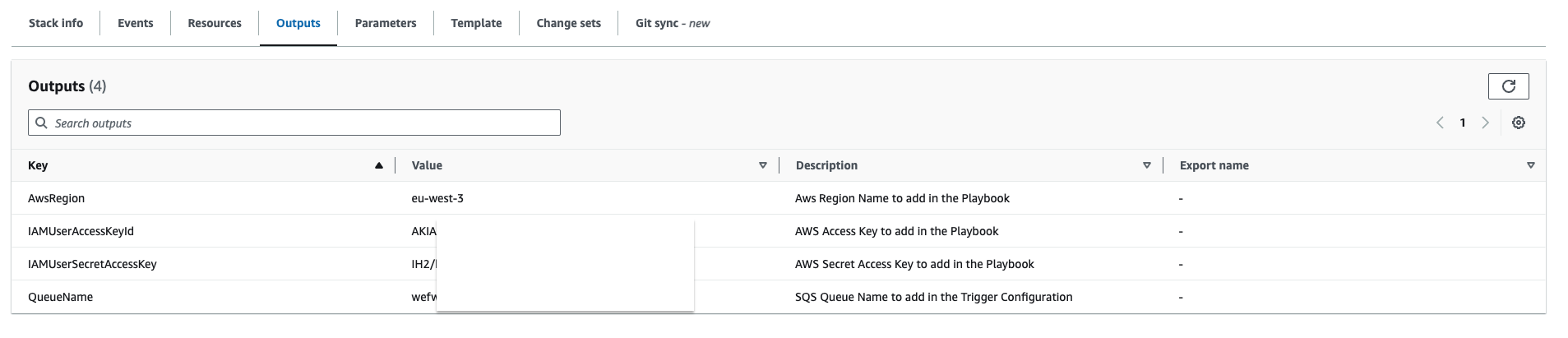

Once the creation has finished, the status CREATE_COMPLETE should be displayed on the left. Click on the Outputs tab to retrieve the information needed for the Sekoia playbook.
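If you prefer to read the outputs programmatically rather than from the Outputs tab, a minimal sketch could look like the following. It works on a stack description shaped like one entry of the `Stacks` list returned by the CloudFormation DescribeStacks API. The output keys shown (`ExampleQueueName`, `ExampleRegion`) are placeholders invented for this example; the actual keys depend on the template.

```python
def is_ready(stack: dict) -> bool:
    """Return True when the stack creation has completed."""
    return stack.get("StackStatus") == "CREATE_COMPLETE"


def outputs_to_dict(stack: dict) -> dict:
    """Map each OutputKey to its OutputValue for one stack description."""
    return {o["OutputKey"]: o["OutputValue"] for o in stack.get("Outputs", [])}


# Hypothetical stack description; the keys and values are placeholders.
sample_stack = {
    "StackName": "example-stack",
    "StackStatus": "CREATE_COMPLETE",
    "Outputs": [
        {"OutputKey": "ExampleQueueName", "OutputValue": "example-queue"},
        {"OutputKey": "ExampleRegion", "OutputValue": "eu-west-1"},
    ],
}

outputs = outputs_to_dict(sample_stack) if is_ready(sample_stack) else {}
```

With boto3 installed and credentials configured, the stack description could be obtained with `boto3.client("cloudformation").describe_stacks(StackName="example-stack")["Stacks"][0]`.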

Create an S3 Bucket

Please refer to this guide to create an S3 Bucket.

Create an SQS queue

The collection relies on S3 Event Notifications, delivered to an SQS queue, to detect new S3 objects.
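To make the mechanism concrete, here is a minimal sketch of how a consumer might read one S3 event notification taken from the queue and extract the bucket and key of each newly created object. The function name `extract_objects` and the sample values are assumptions for illustration; this is not the connector's actual code.

```python
import json
from urllib.parse import unquote_plus


def extract_objects(message_body: str) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs for created objects in one notification."""
    payload = json.loads(message_body)
    objects = []
    # S3 sends an "s3:TestEvent" message without "Records" when the
    # notification is first configured; .get() makes that a no-op.
    for record in payload.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Object keys are URL-encoded in the notification payload.
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


# Hypothetical notification body with one creation and one deletion.
sample_body = json.dumps({
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "example-bucket"},
                "object": {"key": "logs/2024/app+server%3A1.log"},
            },
        },
        {
            "eventName": "ObjectRemoved:Delete",
            "s3": {
                "bucket": {"name": "example-bucket"},
                "object": {"key": "old.log"},
            },
        },
    ]
})
new_objects = extract_objects(sample_body)
```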

- Create a queue in the SQS service by following this guide

- In the Access Policy step, choose the advanced configuration and adapt this configuration sample with your own SQS Amazon Resource Name (ARN) (the main change is the Service directive allowing S3 bucket access):

    {
      "Version": "2008-10-17",
      "Id": "__default_policy_ID",
      "Statement": [
        {
          "Sid": "__owner_statement",
          "Effect": "Allow",
          "Principal": {
            "Service": "s3.amazonaws.com"
          },
          "Action": "SQS:SendMessage",
          "Resource": "arn:aws:sqs:XXX:XXX"
        }
      ]
    }
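As a convenience, the policy sample above can be generated for a given queue ARN. The sketch below is one way to do it; the function name `build_queue_policy`, the ARN check, and the example ARN (with the placeholder account ID `123456789012`) are assumptions for illustration.

```python
import json


def build_queue_policy(queue_arn: str) -> dict:
    """Build the access policy from the sample for the given SQS queue ARN."""
    # An SQS ARN has six colon-separated parts:
    # arn:<partition>:sqs:<region>:<account-id>:<queue-name>
    parts = queue_arn.split(":")
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "sqs":
        raise ValueError(f"not an SQS queue ARN: {queue_arn!r}")
    return {
        "Version": "2008-10-17",
        "Id": "__default_policy_ID",
        "Statement": [
            {
                "Sid": "__owner_statement",
                "Effect": "Allow",
                # Allows the S3 service to send messages to the queue.
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "SQS:SendMessage",
                "Resource": queue_arn,
            }
        ],
    }


# Placeholder ARN; replace it with the ARN of your own queue.
policy = build_queue_policy("arn:aws:sqs:eu-west-1:123456789012:example-queue")
policy_json = json.dumps(policy, indent=2)
```

The resulting `policy_json` string can be pasted into the advanced access policy editor.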

Important

Keep in mind that you have to create the SQS queue in the same region as the S3 bucket you want to watch.
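A quick way to check this constraint is to compare the region embedded in the queue ARN with the region of the bucket. The sketch below assumes you already know the bucket's region; the helper names and sample values are invented for this example.

```python
def queue_region(queue_arn: str) -> str:
    """Extract the region from an SQS queue ARN."""
    # Expected shape: arn:<partition>:sqs:<region>:<account-id>:<queue-name>
    parts = queue_arn.split(":")
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "sqs":
        raise ValueError(f"not an SQS queue ARN: {queue_arn!r}")
    return parts[3]


def same_region(queue_arn: str, bucket_region: str) -> bool:
    """Return True when the queue and the bucket are in the same region."""
    return queue_region(queue_arn) == bucket_region


# Placeholder values; replace them with your own queue ARN and bucket region.
example_arn = "arn:aws:sqs:eu-west-1:123456789012:example-queue"
ok = same_region(example_arn, "eu-west-1")
mismatch = same_region(example_arn, "us-east-1")
```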

Create an S3 Event Notification

Use the following guide to create an S3 Event Notification. When configuring it:

- Select the notification for object creation in the Event type section

- As the destination, choose the SQS service

- Select the queue you created in the previous section
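The same three choices can be expressed as a notification configuration document. The sketch below builds the structure that the S3 PutBucketNotificationConfiguration API accepts; the function name and the example ARN are assumptions for illustration.

```python
def build_notification_configuration(queue_arn: str) -> dict:
    """Build an S3 notification configuration that targets an SQS queue."""
    return {
        "QueueConfigurations": [
            {
                # Destination: the SQS queue created in the previous section.
                "QueueArn": queue_arn,
                # Event type: every kind of object creation.
                "Events": ["s3:ObjectCreated:*"],
            }
        ]
    }


# Placeholder ARN; replace it with the ARN of your own queue.
config = build_notification_configuration(
    "arn:aws:sqs:eu-west-1:123456789012:example-queue"
)
```

With boto3, this dictionary could be passed as `NotificationConfiguration` to `put_bucket_notification_configuration` on an S3 client, together with the `Bucket` name. Note that this call replaces the bucket's existing notification configuration, so merge in any notifications you want to keep.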

Create the intake

Go to the intake page and create a new intake from the format that will process your logs.

Pull events

Go to the playbook page and create a new playbook with the AWS Fetch new logs on S3 connector.

Set up the module configuration with the AWS access key, the secret key, and the region name. Set up the trigger configuration with the name of the SQS queue and the intake key of the intake you created previously.

Start the playbook and enjoy your events.